Our customer success team recently released our Customer Central portal, which provides a wealth of material to help customers succeed. The project has been under way for some time, but its release got me thinking about the future of the SaaS application experience.

Much of the discussion about the user experience a software vendor provides focuses on the technology itself. The technology is certainly a key part of a user's experience with a software application, but it is far from the only part.

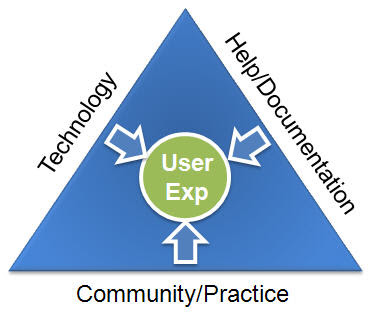

There are three main areas of the overall experience a user has with software:

The Software Application: the product itself, along with the aspects of its technology and user interface that guide users toward understanding and using it well.

Help and Documentation: the explicit knowledge that surrounds the application and explains how to use it. Whether it lives in a help center, support portal, or knowledge base, this documentation is generally application-specific and task-focused.

Community and Practice Information: the implicit knowledge of how an application can best be used to achieve a business goal. This information is often shared among peers who have used the application to tackle a business objective, and it has a broader scope, covering the business, process, and political issues they are likely to encounter.

Why bring this up?

Because I suspect we are at an interesting inflection point in the market, after which these three experiences will no longer be separate.

Today, these three areas of experience are separate. Software vendors provide the application experience, and although they may use a variety of third-party tools to build it, they deliver it as a relatively self-contained experience.

The documentation for a software application is, similarly, delivered as a separate experience. Very often it runs on a third-party documentation platform that stores and presents information and lets users search it in a variety of ways.

Community and best-practice information is delivered in a much less standard way. Sometimes it comes through a single portal that hosts a vibrant community, but often it is an amalgamation of sources, some company-sponsored and some user-driven, where best-practice approaches are shared.

A few interesting trends in each of these areas make me think that within a few short years we will be dealing with a single user experience across all three.

First, SaaS technologies, unlike installed software, make it easy to integrate the application experience with the help documentation. Because both the application and the documentation libraries are now built to be deployed in the cloud, embedding the help documentation directly into the application experience is a relatively simple engineering exercise.

Today's documentation systems are rapidly evolving to support this approach, because it gives users contextual access to key information and thus greatly improves their experience with the application.

Second, community software and help documentation software are both evolving to bring their experiences closer together. Idea exchanges, discussion forums, and collaborative voting on enhancements all allow readers of once-passive documentation to interact with one another and exchange ideas.

Third, applications themselves are beginning to enable the sharing of best-practice approaches. In demand generation and B2B marketing, the best automation programs for event management, free trial optimization, data management, lead routing, and lead scoring are being shared among marketers within, and even between, organizations.

As these transitions take place, we are moving toward a user experience that combines all three, for the benefit of the marketer. For example, a marketer looking to build a lead nurturing campaign should be able to do all of the following from a single experience:

- compare notes with other marketers who have tackled similar business challenges

- download sample nurturing campaigns as potential starting points

- understand the precise functionality of a particular software feature being used

- build and deploy their own lead nurturing campaign

When evaluating software offerings, today's marketers increasingly look beyond the demand generation software itself. In its early days, SaaS shifted part of the burden of achieving success back toward the software vendor through a recurring, rather than upfront, pricing model. This has been a healthy shift for both the industry and its clients. Now, as marketers push to evaluate how each vendor will provide an overall, holistic experience that helps them succeed, we will see this shift continue.

Software continually evolves, and at each evolutionary step, disparate parts of the software stack become seamlessly integrated for the benefit of the user. I suspect that one of the next steps we are about to see is the integration of the application, the documentation, and the community into a single, seamless experience for the user. I, for one, am looking forward to it.

I welcome your comments. Is this a shift you can see happening? Are you beginning to evaluate software in general, and demand generation software specifically, on its overall experience and path to success?
